Read time: 14 minutes

It’s strange to think that it’s already been a full year since I rather spontaneously decided to demand-test a premium newsletter idea I had.

It’s even crazier to think that this newsletter has since had subscribers from places like Google, Spotify, Salesforce, Lufthansa Airlines, YouTube, and plenty of small, AI-forward teams building fast and asking big questions.

So first: you’re amazing, thank you for being here to celebrate with me! 🎉

If you’re on the newer side here, I do my best to fill this monthly newsletter with content that aims to answer questions I get from many of you each month. That hasn’t changed since April 2024.

So this edition follows up on the #1 and #2 things people keep asking me about lately:

“How has Gemini 2.5 Pro performed in your analysis tests?” and “Have you tried DeepSeek for research tasks?”

There’s a lot of hype around DeepSeek and a lot of hope around Gemini 2.5 Pro (Experimental). But hype and hope don’t give you customer clarity. So I tested both using real-world analysis tasks that researchers, designers, and PMs do all the time.

Plus…I want to give you a new way to think about AI benchmark tests (since none of them are built for our kind of work) and tell you about a promising new prompting technique that saves a lot of tokens.

Let’s get into it 👇

In this edition:

📝 My new favorite reasoning model for qualitative analysis - and one that’s worse at verbatim quotes than ChatGPT. Findings from April tests.

🧪 How I use AI benchmarks (even though none are made for us). Two benchmarks I pay attention to and what they tell us.

🧑‍🔬 A better alternative to Chain-of-Thought prompting? A new method, tested against CoT in a recent study, shows promise for saving tokens without losing accuracy.

WORKFLOW UPGRADES

📝 My new favorite reasoning model for qualitative analysis - and one that’s worse at verbatim quotes than ChatGPT

I’ve been getting more questions about how these two models handle analysis than about almost all other AI topics combined.

There’s a lot of hype around DeepSeek generally, and a lot of hope around Gemini 2.5 Pro (Experimental). But I wanted to know: can they actually figure out what customers need? I tested how they:

Synthesize raw open-text survey data

Cluster messy interview notes

Pick through the nuances and contradictions in customer comments

Find the problems behind feature requests

Identify useful, real quotes to support an insight

…and a few other smaller subtasks.

What I tested -

Without giving away my exact test protocol (more on my test protocols in my AI Analysis course 😉), here’s what I tested in Gemini 2.5 Pro and DeepSeek R1 (in Perplexity):

Each model got a mix of long and short documents (interviews, surveys, comments, requests) and different formats

I used repeatable prompts—same task, same instruction structure

I deliberately tested out-of-order chunks from the data sets to see how each model handled non-linear input

The Top Level Results -

Gemini’s reasoning model felt cautious but dependable. It often listed fewer findings than I did from manual analysis, but all were highly evidenced. (High standards are usually a good thing, right?)

Meanwhile, DeepSeek’s reasoning model was willing to make up outputs just to finish the task.

Here’s what I mean - an example of DeepSeek going off the rails: It pulled out “observations” before I’d even uploaded any transcripts! 🤔

Here’s a breakdown and notes from my assessment of each model’s performance on a few sub-tasks.

| Task Type | Gemini 2.5 Pro (Experimental) | DeepSeek (via Perplexity) |
|---|---|---|
| Survey open-text field synthesis | ✅ Accurate, consistent grouping of ideas in line with my manual version | ⚠️ Captured some nuance, but invented details within themes nearly 50% of the time |
| Unique theme identification | ✅ Logical, well-separated clusters with an okay level of default granularity. It listed only a short set of highly evidenced themes, so you must push for more. | ⚠️ Too much overlap between themes and no minimum evidence threshold for calling something a theme (ex: called a comment from 1 participant a pattern) |
| Evidence gathering | ✅ Strong fidelity: real verbatim quotes nearly 100% of the time, with correct attribution to participants | ❌ Fake quotes and attribution errors constantly. Excessively referenced just a few participants/responses. |
| Long input handling | ✅ Smooth handling. Never ran into issues with token limits or uploading content, even with transcripts from 1.5-hour sessions. | ⚠️ Dropped mid-points in ~30-50% of transcripts and long survey data sets. Seemed to read only parts of each document and ignored the rest. |

⭐ Gemini 2.5 Pro (Experimental):

Context handling: It handles full transcripts in a single pass without breaking narrative structure. Very little evidence of early truncation or summary-mode collapse.

Completeness + depth: Few themes identified, seemingly due to focus on accuracy. Tends to give a combination of synthesized statement and verbatim quote when asked for “observations”.

Accuracy: Not just correct quotes—it resisted inventing insights altogether, even when pushed with vague prompts.

Drawback: Slightly too cautious for my style. Gemini tends to “hedge” when it’s unsure, which sometimes reads like passive language or vague generalities.

😲 DeepSeek via Perplexity:

Context handling: Uploads weren’t typically problematic, but it seems to review content too fast - no thorough review of all materials by default.

Completeness + depth: It picked up some subtle emotional and behavioral patterns that Gemini missed. But when it hallucinated, it did so spectacularly—assigning quotes to people who never said them, synthesizing quotes far more than using the real verbatim ones, and making up quotes and claims entirely.

Accuracy: Even with search turned off, DeepSeek referenced non-uploaded internet sources, and generated outputs before any content was uploaded—indicating background leakage from pre-training or search behavior. Not great.

Drawback: Everything? I’m not motivated to use this again (but I will, because repeated testing matters and I’ll try some new techniques next time).
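If you want to spot-check quote fidelity yourself, here is a minimal Python sketch of one way to do it. It is not my test protocol, just an illustration: it assumes you have each transcript as plain text keyed by a participant ID, and that you have copied the model's quotes into a list. The function names (`normalize`, `check_quotes`) and the sample data are made up for the example.

```python
import re

def normalize(text: str) -> str:
    """Lowercase, unify curly quotes, and collapse whitespace so that
    trivial formatting differences don't count as mismatches."""
    text = text.replace("\u2019", "'").replace("\u2018", "'")
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip().lower()

def check_quotes(quotes, transcripts):
    """quotes: list of (participant_id, quote) pairs reported by the model.
    transcripts: dict mapping participant_id to the full transcript text.
    Labels each quote 'verbatim', 'misattributed', or 'not_found'."""
    normalized = {pid: normalize(text) for pid, text in transcripts.items()}
    results = []
    for pid, quote in quotes:
        q = normalize(quote)
        if not q:
            status = "not_found"  # an empty quote can't be evidence
        elif q in normalized.get(pid, ""):
            status = "verbatim"
        elif any(q in text for text in normalized.values()):
            status = "misattributed"  # real words, wrong person
        else:
            status = "not_found"  # possibly paraphrased or invented
        results.append({"participant": pid, "quote": quote, "status": status})
    return results

# Hypothetical sample data, for illustration only.
transcripts = {
    "P1": "I mostly export to a spreadsheet because the dashboard is slow.",
    "P2": "Honestly, I never use the mobile app.",
}
quotes = [
    ("P1", "the dashboard is slow"),
    ("P1", "I never use the mobile app"),
    ("P2", "The app crashes every day"),
]
for row in check_quotes(quotes, transcripts):
    print(row["status"], "|", row["participant"], "|", row["quote"])
```

A check like this only catches exact-match problems. A quote the model lightly reworded will show up as `not_found`, so treat that label as "go look at the transcript," not as proof of a hallucination.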

In summary…a quick comparison to my 💕 Claude

Gemini 2.5 Pro (Experimental) is good but probably won’t replace my favorite LLM Claude just yet. DeepSeek isn’t even a contender.

The new Gemini reasoning model sticks faithfully to what’s actually in the data. But Claude is able to read between the lines of what someone says and determine what they might mean, even if it wasn’t explicitly said.

That kind of zooming out from the data is important for me a lot of the time, but might not be right for you.

⭐️ Tip: When choosing LLMs for tasks like analysis, think about how you analyze customer input and what kind of “mode” you need from your AI: extra-careful faithfulness to the data, or more hypothesizing about potential meaning that should be explored further.

AI FUNDAMENTALS

📝 There Are No AI Benchmark Tests for Customer Research Tasks. Here’s How I Use Them Anyway.

If you’ve ever wondered “Which LLM is best for my customer discovery tasks?” and Googled your way into a swamp of acronyms — you're not alone. The truth is: none of the standard LLM benchmarks test qualitative research workflows - so I mostly use benchmarks to check data analysis performance improvements.

First…What are Benchmark Tests?

AI benchmarks are scoreboards: tight, standardized tests that show how sharply a model performs against a fixed set of challenges.

They’re run by university labs, big tech, and scrappy independents so builders and buyers can cut through the noise and see what works.

BUT…a winning score only means the model crushes the particular use case that was tested. It does not guarantee high performance in every real-world scenario.

Two AI benchmarks I look at (and why)

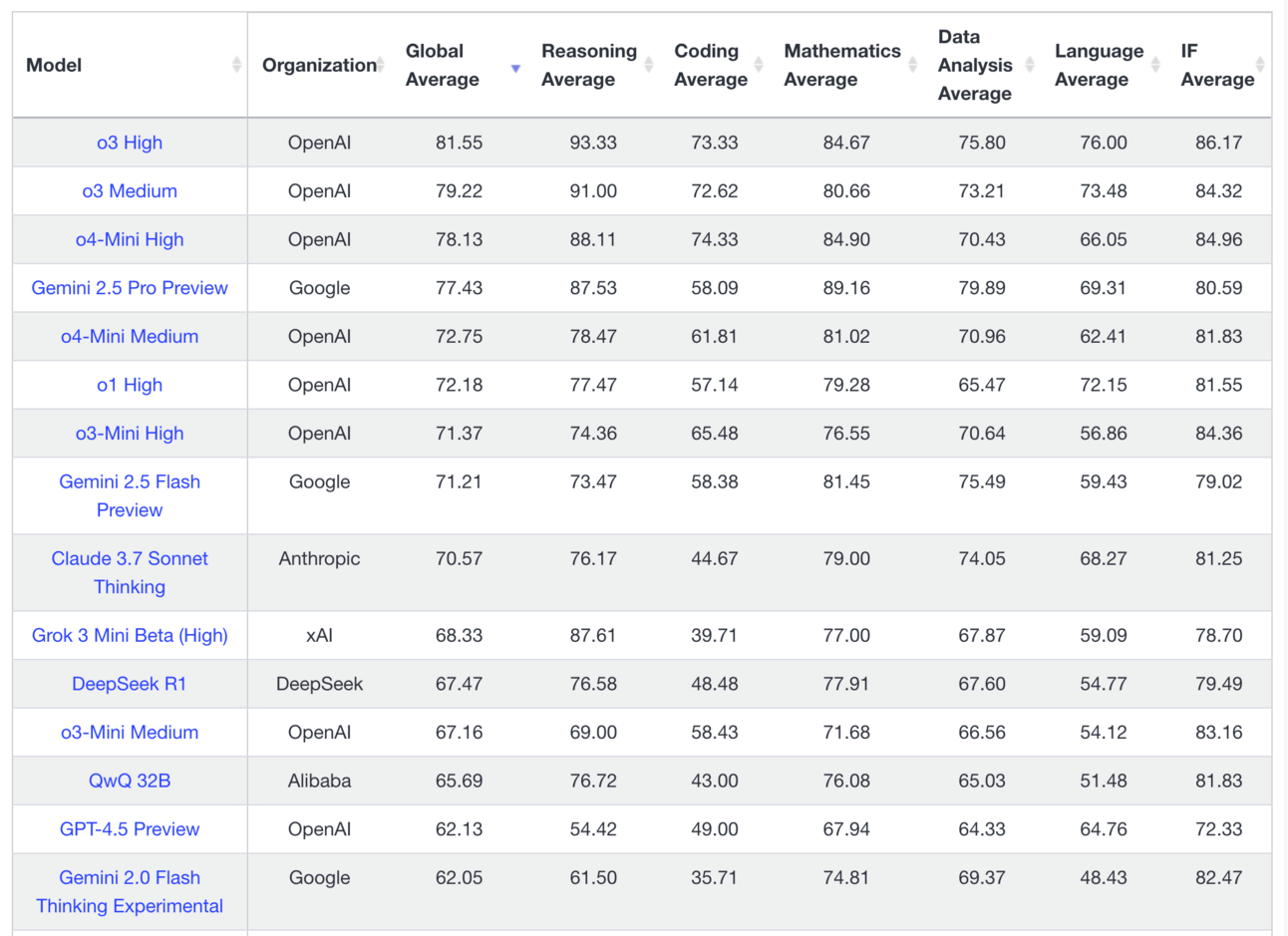

✅ 1. LiveBench — 2025-04-02 release

What it tests

18 fresh tasks each month—reasoning, language, coding, math, data-analysis, instruction following.

All questions pulled from brand-new news and arXiv papers, then auto-graded against ground-truth answers (no LLM-judge bias).

Why it matters

In particular, LiveBench tests models’ data analysis skills on the latest openly available datasets on Kaggle to see how they handle new data the models haven’t been trained on yet.

Latest signal (leaderboard Apr 2025)

Top tier now: Gemini 2.5 Pro for data analysis

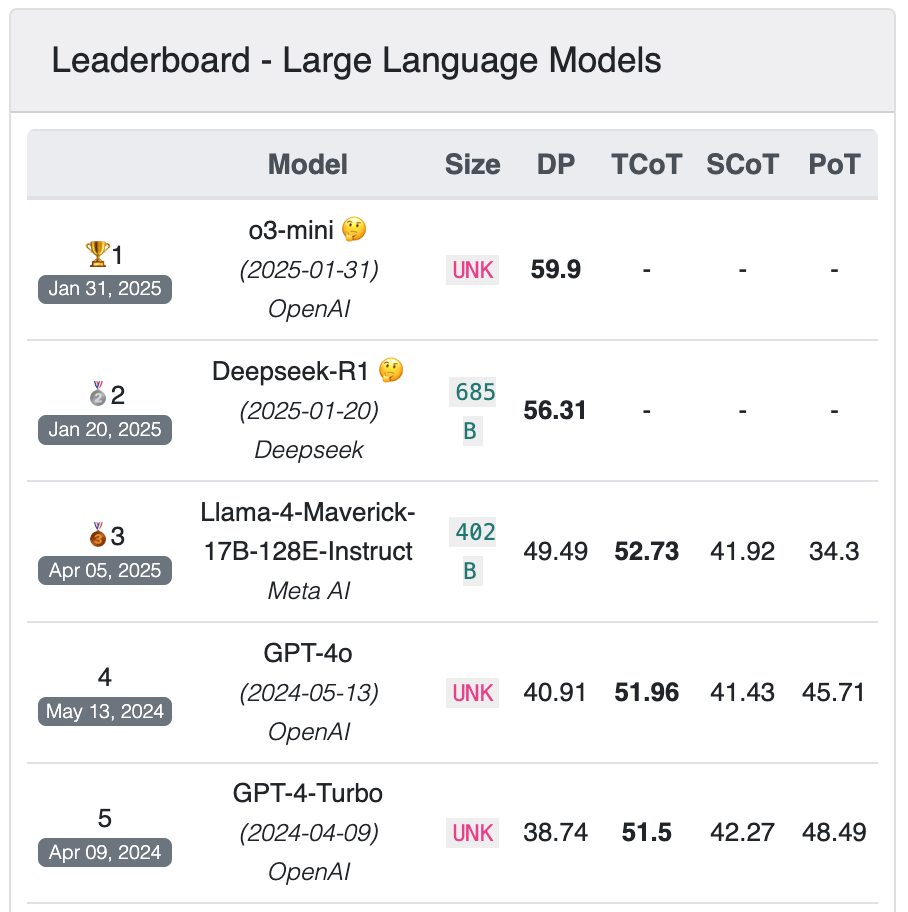

✅ 2. TableBench — refreshed 2025-04-18

What it tests

886 real-world tables across 18 sub-tasks (fact-checking → numerical & trend analysis → chart description).

Why it matters

Mirrors the day-to-day grind of reading crosstabs, pivoting spreadsheets, running quick stats, and explaining results in plain English.

Latest signal (leaderboard Apr 2025)

OpenAI models (o-series) still lead, with DeepSeek close behind.

How I use them

I keep it simple: I find the benchmark categories closest to my own tasks (ex: data analysis), then shortlist the top two models on each board for those categories.

Then I run a small pilot on my own data to see how they perform.
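The shortlisting step can be sketched in a few lines of Python. This is only an illustration under assumptions: the board names, model names, and scores below are placeholders, not real leaderboard numbers, and `shortlist` is a hypothetical helper.

```python
def shortlist(leaderboards, task, top_n=2):
    """Return the top_n models per board for one task category,
    merged into a single list without duplicates."""
    picks = []
    for board, categories in leaderboards.items():
        scores = categories.get(task, {})
        ranked = sorted(scores, key=scores.get, reverse=True)
        for model in ranked[:top_n]:
            if model not in picks:
                picks.append(model)
    return picks

# Placeholder scores, invented for the example.
leaderboards = {
    "board_1": {"data_analysis": {"model_a": 71.0, "model_b": 68.5, "model_c": 60.2}},
    "board_2": {"data_analysis": {"model_c": 55.0, "model_a": 52.1, "model_d": 49.9}},
}
print(shortlist(leaderboards, "data_analysis"))
```

The resulting list is the set of candidates for the pilot, not a ranking. Scores from different boards are not comparable with each other, so the sketch never mixes them.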

⚠️ Reality check:

In my own head-to-head tests, the top performers on benchmark tests aren’t always the best on my exact tasks. Their leaderboard performance often nudges me to test a model I wouldn’t have considered. Roughly half the time, that “dark-horse” ends up in my regular stack.

AI STUDIES

🧑‍🔬 A new way to prompt? Researchers wanted to know if “SoT” could beat Chain of Thought at its own game.

The TL;DR -

This recent study proposes a more efficient way for LLMs to reason their way to an answer — and it might just save a lot of compute while improving accuracy in some cases.

Instead of having the model "think out loud" in long, verbose steps (the now-standard Chain-of-Thought or CoT approach), this new method — called Sketch-of-Thought (SoT) — guides models to reason more strategically and concisely.

Think of CoT like your entire math homework when you were a kid. Every line and scratch-out included. SoT is like a clean outline of the key steps, focused only on the important stuff.

What the researchers did

They tested SoT across multiple types of tasks — multiple choice, yes/no, numeric, open-ended, multilingual, and even visual question answering.

Instead of measuring just output quality, they tracked the tokens used during the "thinking" phase (not the final answer).

They used a "router model" to decide the best reasoning style for a given problem — kind of like a mini-director helping the LLM choose the most efficient route.

Key Takeaways from the Study

Massive token savings: SoT used up to 76% fewer tokens compared to CoT.

More cost-efficient reasoning: Less token use = less compute = lower cost. Savings could reach 70–80% in complex tasks.

Same or better accuracy: Despite being shorter, SoT matched or outperformed CoT, especially on math problems.

Better adaptability: SoT worked across languages and multimodal inputs, not just text.
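To make the token math concrete, here is a small worked example in Python. Only the 76% figure comes from the study summary above. The token count per task, the number of tasks, and the price are hypothetical values picked for easy arithmetic, and the calculation covers reasoning tokens only, not the prompt or the final answer.

```python
def tokens_after_reduction(tokens, percent_fewer):
    """Tokens left after cutting usage by percent_fewer percent."""
    return tokens * (100 - percent_fewer) / 100

def reasoning_cost(tokens_per_task, tasks, usd_per_million_tokens):
    """Cost in USD of the reasoning tokens across all tasks."""
    return tokens_per_task * tasks * usd_per_million_tokens / 1_000_000

cot_tokens = 2_000   # hypothetical reasoning tokens per task with CoT
tasks = 5_000        # hypothetical number of tasks
price = 10.0         # hypothetical USD per million output tokens

sot_tokens = tokens_after_reduction(cot_tokens, 76)  # best case from the study
cot_cost = reasoning_cost(cot_tokens, tasks, price)
sot_cost = reasoning_cost(sot_tokens, tasks, price)

print(f"CoT: {cot_tokens} tokens/task, ${cot_cost:.2f} total")
print(f"SoT: {sot_tokens:.0f} tokens/task, ${sot_cost:.2f} total")
```

With these made-up inputs, 2,000 reasoning tokens per task drop to 480, and the reasoning bill drops from $100 to $24. Since "up to 76%" is a best case, real savings on your tasks would likely be smaller.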

Why this matters:

If you're doing anything that involves complex LLM reasoning (like multi-step problem solving, multi-turn analysis, or synthetic customer interviews), token use can get expensive fast.

SoT shows there may be smarter, cheaper ways to get good outputs without paying for walls of token-heavy logic. Even better — it’s inspired by how humans actually think: jump to the good parts, skip the fluff.

How you might apply SoT principles in your own prompting:

You can’t plug in a "router model" without some additional setup, but you can try nudging models to reason more like SoT:

✅ Try: “Outline your reasoning briefly and directly.”

🧠 Try: “Sketch the essential steps needed to solve this.”

⚖️ Try: CoT vs SoT-style prompting: Compare the detail-heavy approach with a more concise, strategic version to see which one performs better for your task.

Basically, you’re guiding the model to be efficient on purpose — which is both a mindset shift and a useful cost-saving habit.
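If you want to run the CoT vs SoT-style comparison a bit more systematically, a rough Python sketch could look like this. It makes several assumptions: `ask_model` is a placeholder for whatever function you use to send a prompt to your LLM and get text back, the CoT instruction wording is mine, and the word count is only a crude stand-in for real token counts. It also does not reproduce the study's router model.

```python
from typing import Callable

# Instruction wording is illustrative, not taken from the study.
COT_INSTRUCTION = "Think step by step and explain your reasoning in detail before answering."
SOT_STYLE_INSTRUCTION = "Sketch the essential steps needed to solve this, briefly and directly, then answer."

def rough_token_count(text: str) -> int:
    """Crude proxy: number of whitespace-separated words.
    Real tokenizers will give different numbers."""
    return len(text.split())

def compare_prompt_styles(task: str, ask_model: Callable[[str], str]) -> dict:
    """Send the same task with each instruction style and record
    the response plus its approximate length."""
    results = {}
    styles = (("cot", COT_INSTRUCTION), ("sot_style", SOT_STYLE_INSTRUCTION))
    for style, instruction in styles:
        response = ask_model(f"{instruction}\n\nTask: {task}")
        results[style] = {
            "response": response,
            "approx_tokens": rough_token_count(response),
        }
    return results

def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    if prompt.startswith("Sketch"):
        return "short answer"
    return "a much longer step by step answer"

outcome = compare_prompt_styles("Group these survey comments into themes.", fake_model)
for style, data in outcome.items():
    print(style, data["approx_tokens"])
```

Swap `fake_model` for your own model call, run a handful of real tasks through both styles, and read the outputs side by side. Length is only half the comparison, so you still need to judge whether the shorter reasoning gets to an answer that is just as good.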

⚠️ A quick caveat:

This isn’t something you can fully replicate right now — the magic is partly in the router model used in the study, and that setup isn’t readily available.

Don’t throw out Chain-of-Thought just yet. The SoT principles are still useful even when using CoT: concise reasoning, focused steps, and task-matching strategies might help your chosen LLM stick closer to the facts, with a lot less fluff.

WHAT’S COMING NEXT?

Here’s what I want to answer for you in the next few editions -

What does it look like to have AI in an “end to end” customer discovery workflow?

Do we need to have the most expensive $200/month LLM tiers to get the best results?

and more 🤓

Or…tell me what YOU think I should include in an upcoming issue

» Email me «

See you in May!

-Caitlin