Read time: ≈20 minutes

👋 I’m excited about this one!

Yes, I’m in your inbox earlier than usual, because I want you to get inspiration before your brain turns off for the holidays.

Earlier this year, I ran a handful of solo tests with AI moderators, but playing both researcher and participant just wasn’t enough for me.

I wanted to run a real study to see how well AI moderator tools actually do the job.

As you can imagine, my clients were a little bit hesitant to let me push AI moderators on their customers just to see what happens. 🥴

Thanks to many of you and an engaged LinkedIn community, I got 70+ participants to test 5 AI moderation tools.

As far as I know, this is the biggest semi-public, independent study of AI moderators conducted so far! (If you know of another, please let me know.)

In this edition:

👩💻 AI Moderators: What are they, how do they work, and what response quality do we get when they lead a customer session for us?

⭐ An update: I got a LinkedIn message that changed my January plans…

Let’s dig in 👇

WORKFLOW UPGRADES

👩💻 AI Moderation Tools: Is this a workflow upgrade, or is it just hype?

Ever wish you could get deeper user insights without booking a dozen calls or sending a giant survey? I certainly have!

AI moderator tools aim to fill the gap between a bare-bones survey and a time-consuming interview, so you can gather richer user feedback quickly. Their founders are upfront about this: in my conversations with them, they told me their goal is to help us scale the depth of qualitative insights without forcing us to run dozens of one-on-one interviews.

Let’s set aside, for now, the fact that some tools market themselves as an “Interviewer” for us. I don’t think that’s the point of these tools, and I’ll explain why.

Think of these tools as turning your research bicycle into an e-bike: with some of them, you still decide the direction and the little twists and turns of the route, but now you cover more ground, faster.

The tools I tested: Usercall, Juno, Tellet, Versive, and Wondering.

I experimented extensively with these tools, and some of my tests proved more valuable than others.

Wait, what are AI Moderators?

AI research moderation tools facilitate the collection (and sometimes the analysis) of qualitative user feedback at scale.

They combine elements of surveys and interviews to try to deliver richer insights from a larger number of participants, often in shorter timeframes.

Most of them work like this:

No, participants don’t talk to avatars! I’ve found only one tool that uses avatars, and its team is really cagey; it seems they’re only letting major companies sign up to use it.

Of the 24 AI moderator tools I’ve found on the market today, most only let participants give text input. That makes them very much like a survey that simply knows how to follow up better based on what participants wrote.

Most of the tools I tested let participants answer with a voice recording. I asked participants to use voice because I guessed it would give me more data (at the very least, we’re more likely to ramble when talking than when typing with our thumbs!).

These tools are built on the major Large Language Models (LLMs), such as OpenAI’s GPT models (the ones behind ChatGPT), Anthropic’s Claude, and Google’s Gemini. More often than not, they use OpenAI’s models and have agreements with OpenAI not to feed your data back into OpenAI’s training. However, I recommend double-checking each tool’s privacy policy before adopting it.

A few exceptions out there

According to my chats with their founders, these tools aren’t self-serve. They’re a bit like having an agency run the research for you, but at a much lower cost, with their team helping you set everything up just right. You skip the heavy investment in human hours needed to run live 1:1s with hundreds of participants, but setup may require more involvement from both you and their team.

What kind of tasks do they help with?

AI moderators can typically do the following:

Automated Questions:

Got a bunch of research goals but not sure how to ask the right questions? AI moderation tools can suggest smart, relevant questions. If you prefer to write your own, they’ll polish them so you’re not accidentally leading people down the wrong path.

Adaptive Interactions:

Instead of sending a static list of questions, these tools adapt in real time. If a participant says something interesting (or confusing), the AI can dig deeper, skip irrelevant questions, or clarify points, much as a human moderator would, but at scale.

Rich Data, Not Just Checkboxes:

Unlike basic surveys, these tools capture voice and sometimes video responses, giving you a richer, more human feel to your data. The AI then handles transcription and a sort of “analysis lite”.

Instant Syntheses & Insights:

You’ll spend less time combing through transcripts because the tool does the heavy lifting, pulling out common themes and top takeaways. They’re usually high level, but a good start for deeper dives. Many tools have a “chat” function for hunting specific answers and details, just like AI analysis tools.

Below are the four big takeaways I think you need to know from my AI Moderators experiment.

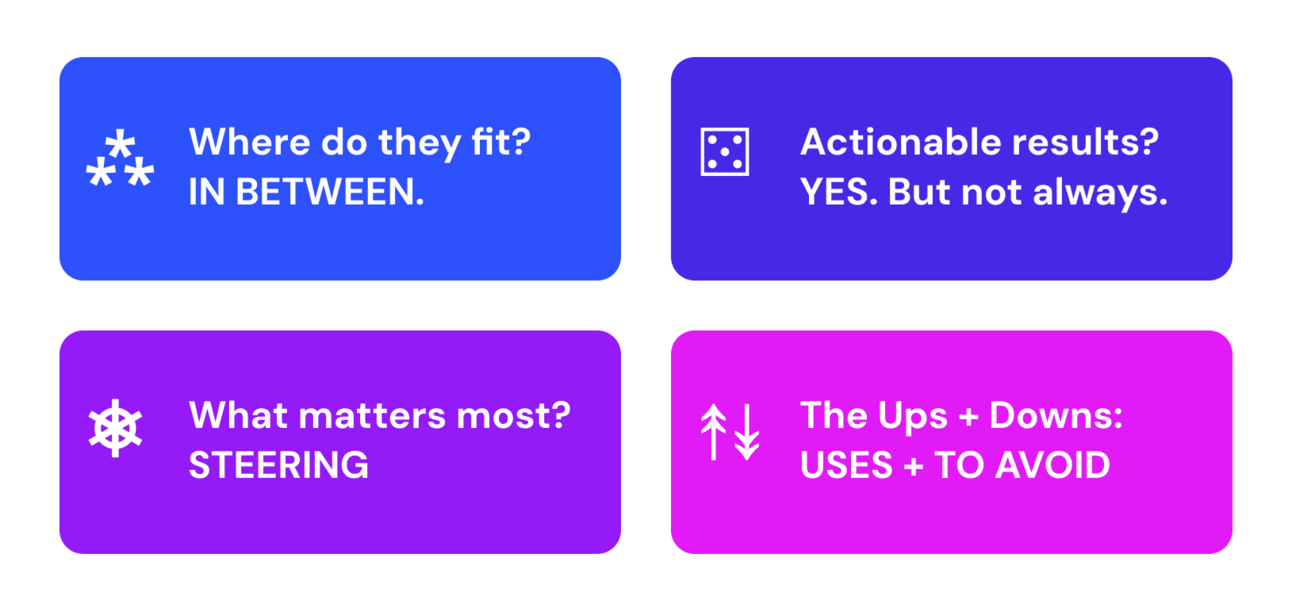

1. They’re a Middle Ground for Qualitative Research

What’s their level of depth?

These tools shine when you need more detail than a multiple-choice survey but don’t have the bandwidth for round-the-clock interviews.

They’re best for an “in-between” space: you’ve run a few interviews or initial discovery sessions, and now you want to quickly confirm patterns or validate a hunch at scale. Instead of slowing down to recruit 20 more participants and spend days booking calls, you can reach 100+ people asynchronously.

Practical Tip:

Don’t think of these as interviews; think of them as very detailed surveys. That feels like a better comparison.

Use them after early qualitative findings, but before committing to a big product change.

Plug them in right after you finish a pilot interview phase, letting you scale insights to a bigger group with minimal extra work.

When to Use:

Between major research sprints to quickly validate new ideas.

When you need richer insights than a survey but don’t have time for 20+ interviews.

When you’re not sure yet where to focus big, time-consuming exploratory research, but you know that surveys won’t tell you enough about the market.

Here’s a walkthrough comparing a few different tools -

2. They Deliver Actionable Responses—But Not Always Perfectly

What’s the quality of responses here?

I found that these tools can actually surface useful feedback. In my AI adoption study, many participants gave thoughtful voice-recorded responses that helped me pinpoint the exact “why” behind their struggles or needs. That’s far richer context than a simple survey gives you, and the responses genuinely surprised me much of the time. I also gathered more detail about the use cases and contexts in which participants use AI than I’ve seen in survey responses, with far less setup time.

However, it wasn’t all sunshine and flawless answers.

Sometimes the AI missed chances to clarify vague or incomplete answers, or let irrelevant tangents slide without probing deeper. Instead of circling back, the moderator sometimes moved on, leaving gaps in understanding where a human moderator would likely have noticed and tried to dig in again.

Looking at transcripts, I sometimes felt like I was watching a junior researcher’s session, going “Noooo! You should have asked […]!” 🤦♀️

Here’s an example:

And an example of pretty good follow-ups -

Practical Tips:

If you spot a pattern of missed opportunities, try adjusting your initial instructions and Objectives, or running a small AI-moderated batch first to check how to best refine your instructions.

Don’t forget to check the FAQs and support documents of any AI moderator tool you try. Most teams give clear advice on how to write instructions for their specific tool in the right format.

3. Some Tools Let You Steer, Others Go on Autopilot

How Much Can You Control Follow-Ups? It Makes A Difference.

AI moderation tools are all a little different, and their follow-up questions mostly depend on a couple of things:

How the tool was set up, and how good the prompting and fine-tuning is in the background

Whether the user is able to add instructions for follow-ups and where to focus

Some let you specify exactly what to probe on; maybe you tell the AI, “If someone mentions onboarding difficulty, ask for a specific example.” Others let you decide how many follow-up questions to ask before moving on. But a few are completely hands-off, expecting you to trust their logic.

After seeing AI-led sessions veer off track or miss key details, I personally preferred the tools that let me guide the conversation. Why? Because control over follow-ups helps ensure you’re not stuck with irrelevant or shallow data. When we’re talking about qualitative insights at scale, I certainly don’t want 100 sessions to give me answers to questions I didn’t even care about. 🫤

I will say I was still positively surprised by the relevance of follow-up questions in the tools that don’t enable follow-up instructions (Usercall, Juno, Tellet). They still did a pretty good job most of the time. But overall, the relevance of follow-ups was higher in the tools where I could tell it what to follow up on (Versive, Wondering).

Practical Tips:

Test a tool that lets you define follow-up criteria and compare it to a “hands-off” one.

Consider choosing AI moderators that let you give instructions for follow-up questions and/or don’t cap how much detail you can add to the initial Objectives or Research Questions in the study setup. Across the board, AI needs more context and guidance to determine what we consider useful and what we don’t.

When filling out follow-up instructions, write specific directions that don’t “overwhelm” the AI: think short, clear sentences and about 3 lines of text. That worked best for me across the tools that allow follow-up guidance.

4. The Ups + Downs: Participants Aren’t Always Thrilled (And It Probably Depends A Lot On Context)

I had 1:1 chats with ≈40% of the participants in this study. But remember: my participants were mostly from my LinkedIn community, not my “customers”. They weren’t starting out frustrated or underserved by me; they were generally curious and open-minded about this experiment. And yet…the feedback was still mixed. Here’s why -

What’s the Participant’s Experience Like?

Some participants in the study appreciated the convenience - being able to participate when it fit their schedules. Many were positively surprised by the relevance of follow-up questions - cool! 🎉

But many others disliked getting repetitive questions, canned responses (“good point”), or abrupt endings to a conversation topic or the full session. 😧

Without human empathy or adaptability, these tools can sometimes feel too impersonal—I imagine this is especially true in sensitive or trust-heavy contexts. (But this study wasn’t one of those cases, so I can only hypothesize here). They’re not built for emotional intelligence (yet), so use caution where extra empathy is key.

Practical Tip:

Have a team discussion about which parts of your current customer experience you could hand to an AI moderator. Think: where do you need deeper responses than a survey gathers, but where no customer will be upset about being handed off to a bot?

Consider a human-led debrief step in combination with AI moderators—maybe a follow-up email with the customer offering a human contact for extra support.

Don’t forget to consider situations where your audience might not have a great internet connection - that seemed to play a big role in whether sessions completed or cut off early. It also seems to affect the quality of AI’s follow-ups—if AI didn’t fully understand a voice recording, obviously it can’t follow up well (neither can humans!)

When You CAN Use AI Moderators:

For lower-stakes projects where high empathy isn’t critical.

When fast feedback outweighs the need for a personal touch.

When you’re fairly confident you know which topics will come up and that no extra human follow-up on them will be needed.

When NOT to Use AI Moderators:

Avoid them for emotionally charged topics or complex, high-stakes research.

Examples you might consider avoiding: churn, incomplete onboarding, following up on frustrated feedback in NPS or other…

Here’s some of the participant feedback I heard:

“It was an awesome experience. The AI was really pressing me for answers, which was great. However, I think 🤔 it still lacks some touch of humanity, so it could capture respondent sentiments as they are going through the process. I guess what I’m trying to say is it still needs some more training to figure out whether the interviewee is already feeling negative, for lack of a better word.”

“I am so impressed that it followed my talking so well and processed it so fast. I could almost take interview tips from how it was rephrasing my replies.”

“I found the voice response to be quite enjoyable and definitely more fluent than stopping to provide text based answers. The follow-up probes were perhaps a bit repetitive in places and quite 'UX-style' in terms of pushing for a specific example/ case of a wider point made prior - a pattern that I started to anticipate as a respondent. But I can see why that leads to richer outputs.”

“I found that some questions were a bit repetitive (especially those about frustrations related to using AI in the research process).”

“Oops, it cut off at 14 minutes, that's normal, right? could it be the question I asked back, or is it my connection?”

〰️

Disclaimer: I got extended trials and free credits for this experiment, but I didn’t get paid by any of these tools to include them. There’s no intentional promotion here, I’ve tried my best to remain objective. ✌️

〰️

But wait, this isn’t the end!

I don’t think AI moderators are something we can test once and never revisit. If you still have questions about how they work and what to do with them, please send them my way: there will be another test in 2025!

I also plan to test a few of the AI moderation tools where the teams behind them are offering more of a concierge service for setting up everything the right way.

The tools tested in this round were “self-serve” - I’m very curious to compare concierge/agency-style with the self-serve model next time.

SOMETHING NEW

⭐ An Update: Building Something New

I opened LinkedIn recently to a DM from the CEO of Maven. 😳 He asked if I’d considered creating a course on AI for Customer Research. Well yes, I have!

I’ve spent 2024 running this newsletter and custom AI training for teams.

Now, I’d like to help more people solve AI adoption challenges in more depth.

Maybe this sounds like you…

You want quick, practical ways to weave AI into your research in as little time as possible - it’s why you’re here.

But you sometimes wish you could power through everything in this newsletter, and more, in just a few days, so you finally feel you’ve caught up, dug deep, and leveled up.

If that 👆 sounds like you — I’d love to know more about your current AI challenges.

The button below links to a survey (it’s fast, I promise!).

And newsletter subscribers will get a discount 😏

Sending you my warmest wishes for a time of year that’s cozy for some and challenging for others. I hope this edition serves as your much-needed inspiration or happy distraction to finish the year.

See you in 2025!

Caitlin