Read time: ≈16 minutes

Happy Autumn!

If you’re at all like me, you might be trying to get AI to take over the tasks you’re not as excited about.

Can’t AI replace my hours of number-crunching for me?

I work as a mixed methods researcher and advisor, but I’m naturally more interested in qualitative work. When I get questions about whether we should use AI to run quantitative analyses, I hesitate a little bit. Because if I’m honest, I’m hoping AI can do most of my quant work soon.

But is that possible, and is that a good approach that I would advise others to follow?

This month, I’ve run some quantitative AI analyses and collected considerations for AI quant work that I think you should know.

On the opposite end of the spectrum from more math-focused research is probably reading humans’ emotions. I repeatedly see arguments made about AI not being on the same level as humans because “AI doesn’t recognize emotions like we do”.

But is that accurate? A recent study on “Emotion AI” has answers…

Plus, a big model update, and some interesting tools.

Here comes the September edition -

In this edition:

🧐 AI Analysis Part II: Quantitative. How much can we trust AI for quant work? My considerations for AI in quantitative analysis tasks.

⚡️ Copy-paste Quantitative Analysis Prompts. A few prompts I used in testing, plus prompts the AI platforms suggested for better results.

😃 Can AI recognize emotion the way humans can? A recent study suggests that it can.

📊 This AI data analysis study I referenced while testing this month might change your mind about how much we can trust AI for quant tasks.

📰 AI News. OpenAI’s latest model can reason better than ever (and apparently better than PhDs). Learn why that matters.

Plus…a few tools I’ve been playing with. Keep reading! 👇

WORKFLOW UPGRADES

🧐 AI Analysis Part II: Quantitative

Based on questions I’ve received, many of you wonder how much we can trust AI to do quantitative tasks for us - sometimes to fill gaps in our own skill sets. Some of you have asked -

“How well can AI do the data analysis for someone who is stronger on the qualitative side?”

“Can I trust it where I might not have the confidence or even the time to do certain quantitative analysis tasks?”

My verdict: Julius AI and ChatGPT-4o handled the quantitative tasks particularly well and ran analyses much faster than I could. However, they become more reliable when given clear guidance on which data to use and how to analyze it, rather than being left to choose directions and make decisions on their own.

What I tested

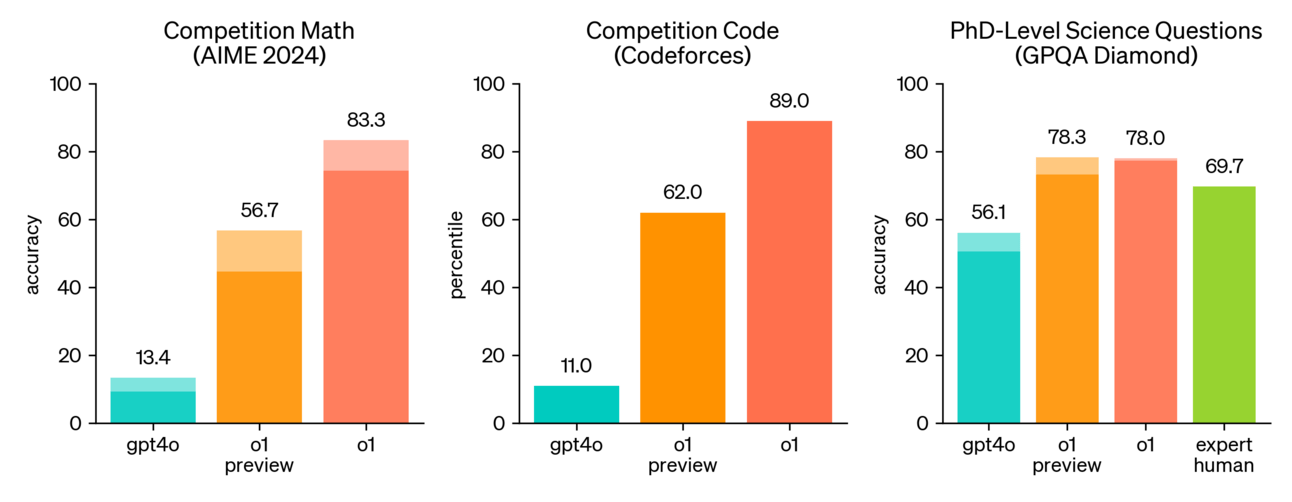

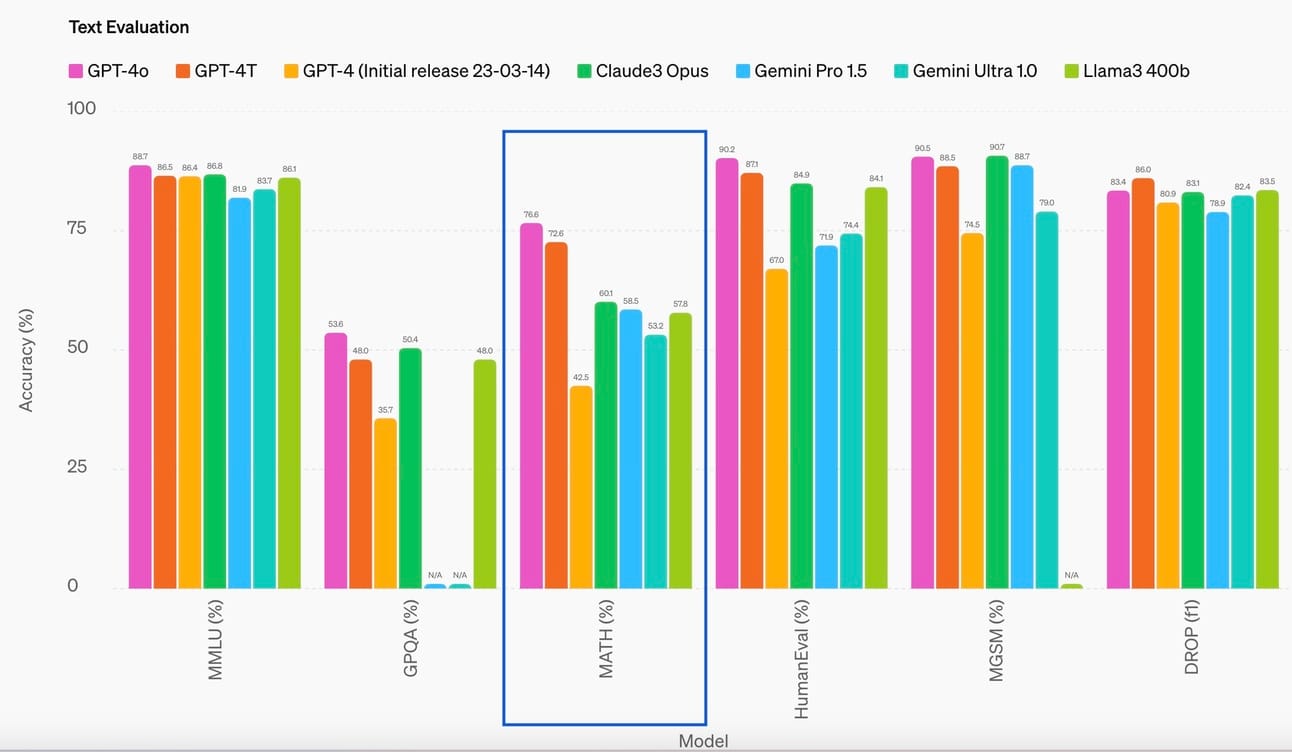

Unlike qualitative research, which has many dedicated AI platforms, quantitative analysis has few. I tested 4 platforms across 3 models, and one of those platforms offered all three major LLMs. Here, I focus on ChatGPT and Julius AI, because ChatGPT has excelled in math benchmark tests (see the graph below) and in data analysis studies.

I didn’t find any real inaccuracies in my testing, but in some cases, the models made strange or incomplete choices about handling the data, which I had to correct.

Julius AI made it particularly easy to spot and correct AI’s unusual choices, because it shows its step-by-step analysis process more transparently.

For reference, math benchmarks indicate how reliably AI models can handle quantitative tasks. So far, GPT-4o has performed best in math evaluations, though its accuracy is still only 76.6% 👇 On data analysis tasks specifically, accuracy appears to be higher, according to this study.

Thinking of using AI for Quantitative Analysis? Here’s where I’ve seen it do best:

Simple pattern recognition, like finding correlations in data

Profiling: identifying patterns that enable creation of segments in a population or customer base

Data visualization: when you know how you want the data analyzed but need faster visualization options than traditional tools

Using Python to label, categorize, clean, or group data differently for alternative analyses, plus other time-consuming data preparation

Checking your analysis and calculations for errors, like a proofreader

Automating manual analysis or calculations that are time-consuming and prone to human error

〰️

I ran analyses of 4 different data sets -

My proprietary survey of business owners (run in 2022)

My proprietary survey of people in design professions (run in 2023)

The World Happiness Report data set from 2015

The World Happiness Report data set from 2019

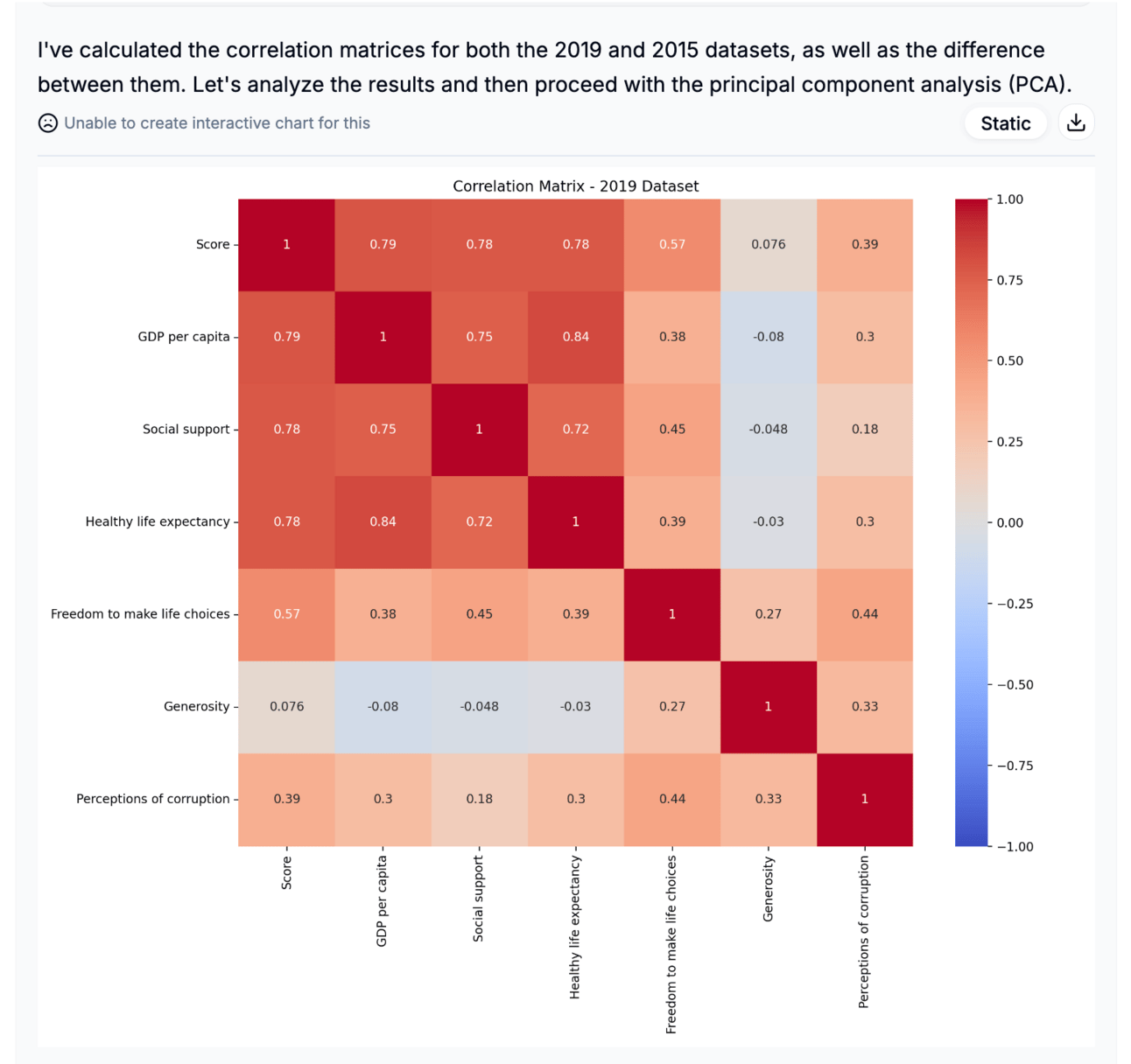

An AI-generated correlation matrix using World Happiness Report data from 2019

Findings & Considerations

AI can do your quant analysis, but don’t get in over your head

If you're not experienced in stats, avoid relying on AI for analyses you can't verify. Some AI models are decent at math, but you should always check AI’s work, especially for complex tasks. Performance benchmarks show it is nowhere near perfect: GPT-4o has the best accuracy score for math problems at 76.6%, versus around 90% for top expert humans. And hallucinations still happen, with no warning about when they will.

—

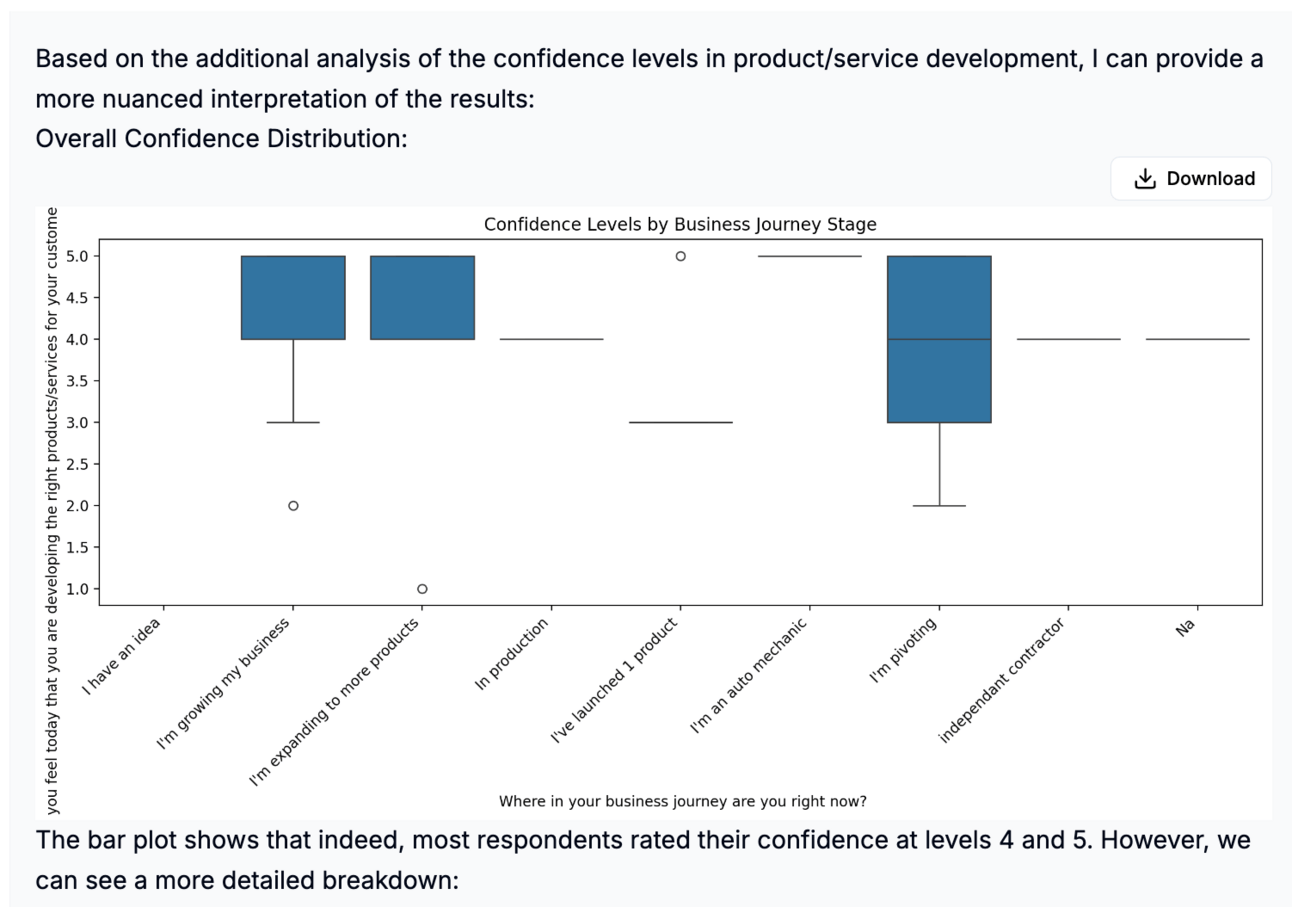

AI is fast but can miss nuances and reliability issues

AI is great for quick analysis and visualizations but often misses subtle insights. For example, it might take survey responses at face value and present calculations as fact, without considering whether participants are misrepresenting themselves, for instance by being overconfident. This isn’t always crucial for useful insights, but it’s worth considering depending on your data and goals.

Example: In one of my surveys, participants seemed to overestimate their knowledge of product development. Many rated their confidence a 5 on a 1-5 scale, saying they were certain they were developing a product customers would value. At first glance, they seemed to have no trouble knowing what to build for customers. However, AI didn’t mention possible respondent bias or overconfidence in its analysis.
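If you suspect a pile-up at the top of a rating scale in your own data, you don't have to wait for AI to raise it. Below is a minimal Python sketch, assuming a 1-5 rating scale; the ratings are made up for illustration, and the 50% threshold is an arbitrary assumption rather than a standard cutoff. It computes the share of respondents who chose the top rating.

```python
def top_box_share(responses, scale_max=5):
    """Share of valid answers that sit at the top of a 1..scale_max rating scale."""
    valid = [r for r in responses if 1 <= r <= scale_max]
    if not valid:
        return 0.0
    return sum(r == scale_max for r in valid) / len(valid)


# Hypothetical 1-5 confidence ratings, not real survey data
ratings = [5, 5, 4, 5, 5, 3, 5, 5, 4, 5]
share = top_box_share(ratings)
print(f"{share:.0%} of respondents chose the top rating")

# Assumed threshold: flag for a closer look when most answers hit the ceiling
if share > 0.5:
    print("Possible ceiling effect or overconfidence: check before reporting")
```

A high share doesn't prove bias on its own. It tells you the question deserves a second look, which is the step the models skipped.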

Additionally, none of the models flagged unreliable data, like biased survey questions, until I specifically asked for a bias assessment.

—

AI can adapt quickly and save time, but check that it adapts correctly

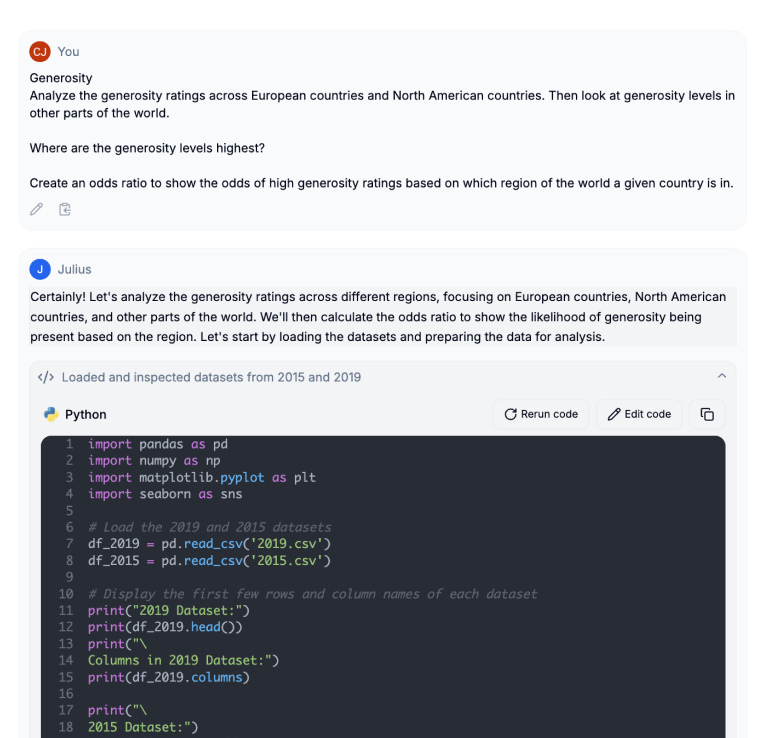

AI may attempt an analysis even when the data isn’t formatted correctly. For example, Julius AI tried to calculate an odds ratio from data that wasn’t suitable, initially using an inaccurate proxy. After I explained how to work with the specific, relevant parts of the data, it improved and produced an accurate odds ratio. Always double-check AI’s data manipulation before trusting its final output.
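An odds ratio needs a 2x2 table of counts: two groups, and a yes/no outcome for each. If your data can't be arranged that way, a model reaching for a "proxy" is a signal to stop and check. Here is a minimal Python sketch of the standard calculation, with a 95% confidence interval from Woolf's log method; the counts and the group labels in the comment are hypothetical.

```python
import math


def odds_ratio_2x2(a, b, c, d, z=1.96):
    """
    Odds ratio for a 2x2 table of counts:
                   outcome yes   outcome no
      group 1           a             b
      group 2           c             d
    Returns (odds_ratio, (ci_low, ci_high)) using Woolf's log-based interval.
    """
    if 0 in (a, b, c, d):
        raise ValueError("Zero cell: use a continuity correction or an exact test")
    ratio = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    low = math.exp(math.log(ratio) - z * se_log)
    high = math.exp(math.log(ratio) + z * se_log)
    return ratio, (low, high)


# Hypothetical counts, e.g. group 1 = "talked to customers", outcome = "product succeeded"
ratio, ci = odds_ratio_2x2(a=30, b=10, c=20, d=20)
print(f"OR = {ratio:.2f}, 95% CI {ci[0]:.2f} to {ci[1]:.2f}")
```

If you can fill in a, b, c, and d from your own columns, you can check the AI's number directly.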

—

AI can make odd data categorizations

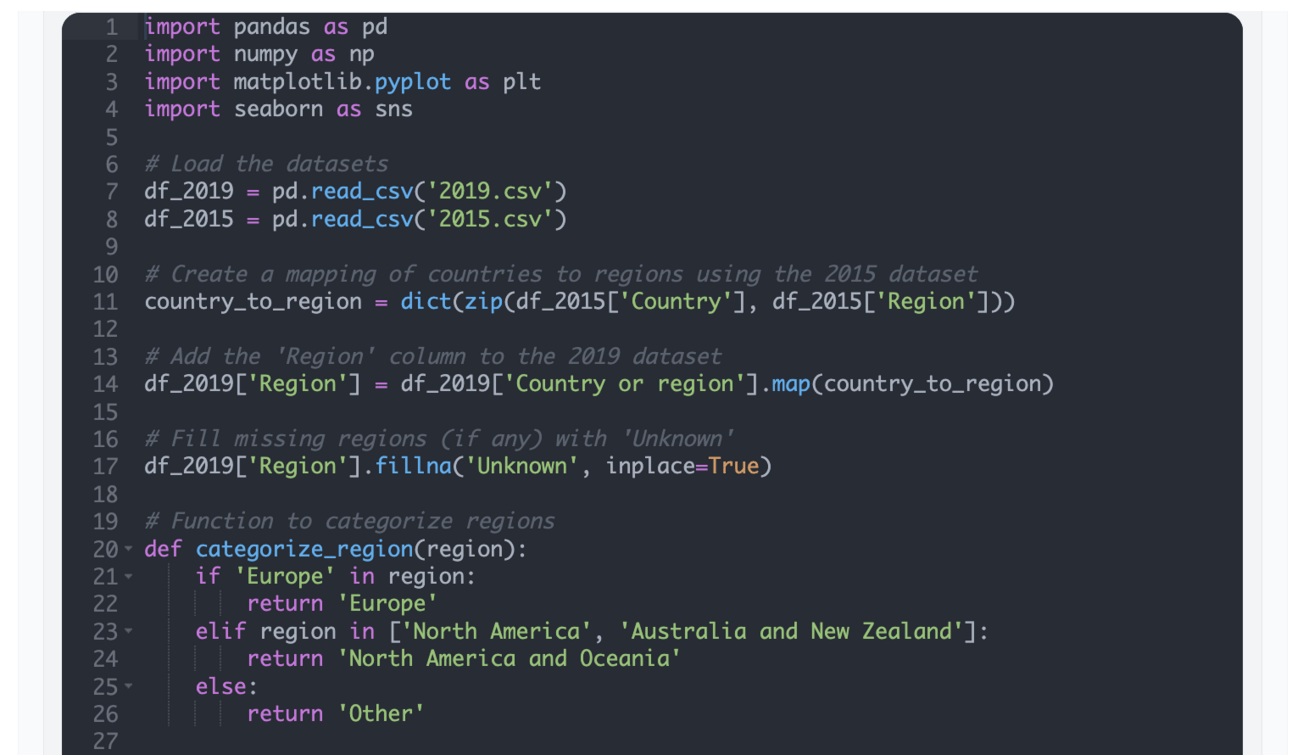

AI sometimes creates unusual groupings. In one test with World Happiness Report data, Julius AI (running GPT-4o) grouped the U.S., Canada, Australia, and New Zealand into a region called “North America and Oceania.” ChatGPT and the other models, tested in their own environments, did not. This error was easy to catch by skimming the Python code, but more complex mistakes could be harder to spot.

Example: I used two data sets from the World Happiness Report to analyze “generosity” across regions. The data didn’t include a Region column, so AI fetched a Python library to match countries in the data set to geographic regions. However, it combined Australia, New Zealand, the U.S., and Canada into a region called “North America and Oceania” without explanation. First, I had asked it to focus on North America and Europe for the analysis (not Oceania). Second, that’s not a commonly used geographic region…

My request to Julius AI and its first step with Python

Julius AI’s analysis step showing the creation of a new world region 😄
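One way to avoid surprise regions is to supply the country-to-region mapping yourself instead of letting the AI pick a library. Here is a minimal Python sketch, assuming a hand-written mapping (the `REGION_BY_COUNTRY` dictionary is hypothetical and deliberately incomplete). It also reports any country it couldn't map, which catches name variants like "USA" vs. "United States".

```python
# Hypothetical hand-maintained mapping: you decide the groupings, not a library
REGION_BY_COUNTRY = {
    "United States": "North America",
    "Canada": "North America",
    "Germany": "Europe",
    "France": "Europe",
    "Australia": "Oceania",
    "New Zealand": "Oceania",
}


def assign_regions(countries, mapping=REGION_BY_COUNTRY):
    """Return (assigned, unmapped) so no country is silently dropped or lumped in."""
    assigned, unmapped = {}, []
    for country in countries:
        region = mapping.get(country)
        if region is None:
            unmapped.append(country)
        else:
            assigned[country] = region
    return assigned, unmapped


def filter_regions(assigned, keep):
    """Countries whose region is in the set of regions you asked for."""
    return [country for country, region in assigned.items() if region in keep]


countries = ["Canada", "Germany", "Australia", "USA"]
assigned, unmapped = assign_regions(countries)
print(filter_regions(assigned, keep={"North America", "Europe"}))
print("Unmapped:", unmapped)
```

You can also paste a mapping like this into your prompt and ask the AI to use it, so the groupings are yours to check.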

—

Visualizations can be useless unless you tell AI which parts of the data to use

When I let AI decide which parts of my data to visualize, it frequently generated graphs that weren’t helpful.

Example: One model created a confidence interval analysis for my survey, but it included free-text responses from the “Other” answer option alongside multiple-choice answers on the X-axis. The result was a messy, confusing graph that I couldn’t use as-is.

When I decided on the type of graph I wanted first, and specified the exact columns and responses to use from my data, AI consistently delivered what I needed, much faster and very accurately.

Tip: Picture the visualization and output you’re looking for, then work backward to how you ask AI to create it. It sounds obvious, but I’ve seen too many people jump into AI for analysis and let it do the thinking for them.
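For the "Other" problem above, the fix is to decide which answer options belong on the X-axis before anything is plotted. Here is a minimal Python sketch, assuming hypothetical answer options and responses: free-text answers are folded into a single "Other" bucket, and each option gets a simple normal-approximation (Wald) 95% confidence interval. The Wald interval is crude for small samples; it's here to show the shape of the calculation, not as the recommended method.

```python
import math
from collections import Counter

# Hypothetical multiple-choice options; "Other" collects all free-text answers
OPTIONS = ["Interviews", "Surveys", "Analytics", "Other"]


def clean_choices(responses, options=OPTIONS):
    """Keep listed options as-is and fold any free-text answer into 'Other'."""
    return [r if r in options else "Other" for r in responses]


def proportion_ci(k, n, z=1.96):
    """Share k/n with a normal-approximation (Wald) 95% confidence interval."""
    p = k / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


responses = ["Interviews", "Surveys", "we ask friends", "Interviews", "Analytics", "gut feeling"]
cleaned = clean_choices(responses)
counts = Counter(cleaned)
n = len(cleaned)

for option in OPTIONS:
    p, low, high = proportion_ci(counts[option], n)
    print(f"{option:<10} {p:.0%}  (95% CI {low:.0%} to {high:.0%})")
```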

AI can prepare and format data much faster for diverse analyses

AI is proactive in some helpful ways, such as automatically renaming mismatched column headers when merging datasets. This saved me a lot of time and kept the analysis consistent across different reports. As a non-expert in Python, I would have needed significantly longer to do it myself.
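If you want to check (or do) the header clean-up yourself, it amounts to a rename map applied before the data sets are combined. Here is a minimal Python sketch using plain dictionaries; the column names and values are hypothetical stand-ins, so check the real headers in your own files. In pandas, the equivalent steps are `DataFrame.rename(columns=...)` followed by `pd.concat`.

```python
# Hypothetical header mismatch between two report years; check your own files
RENAME_2019 = {"Country or region": "Country", "Score": "Happiness Score"}


def harmonize(rows, rename, year):
    """Rename columns using `rename` and tag every row with its year."""
    out = []
    for row in rows:
        new_row = {rename.get(col, col): value for col, value in row.items()}
        new_row["Year"] = year
        out.append(new_row)
    return out


def mismatched_columns(rows):
    """Columns that don't appear in every row (an empty set means headers line up)."""
    all_cols = set().union(*(row.keys() for row in rows))
    shared = set.intersection(*(set(row) for row in rows))
    return all_cols - shared


# Illustrative placeholder rows, not real report values
rows_2015 = [{"Country": "Country A", "Happiness Score": 7.0, "Generosity": 0.30}]
rows_2019 = [{"Country or region": "Country A", "Score": 7.1, "Generosity": 0.28}]

combined = harmonize(rows_2015, {}, 2015) + harmonize(rows_2019, RENAME_2019, 2019)
print(mismatched_columns(combined) or "Headers match across years")
```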

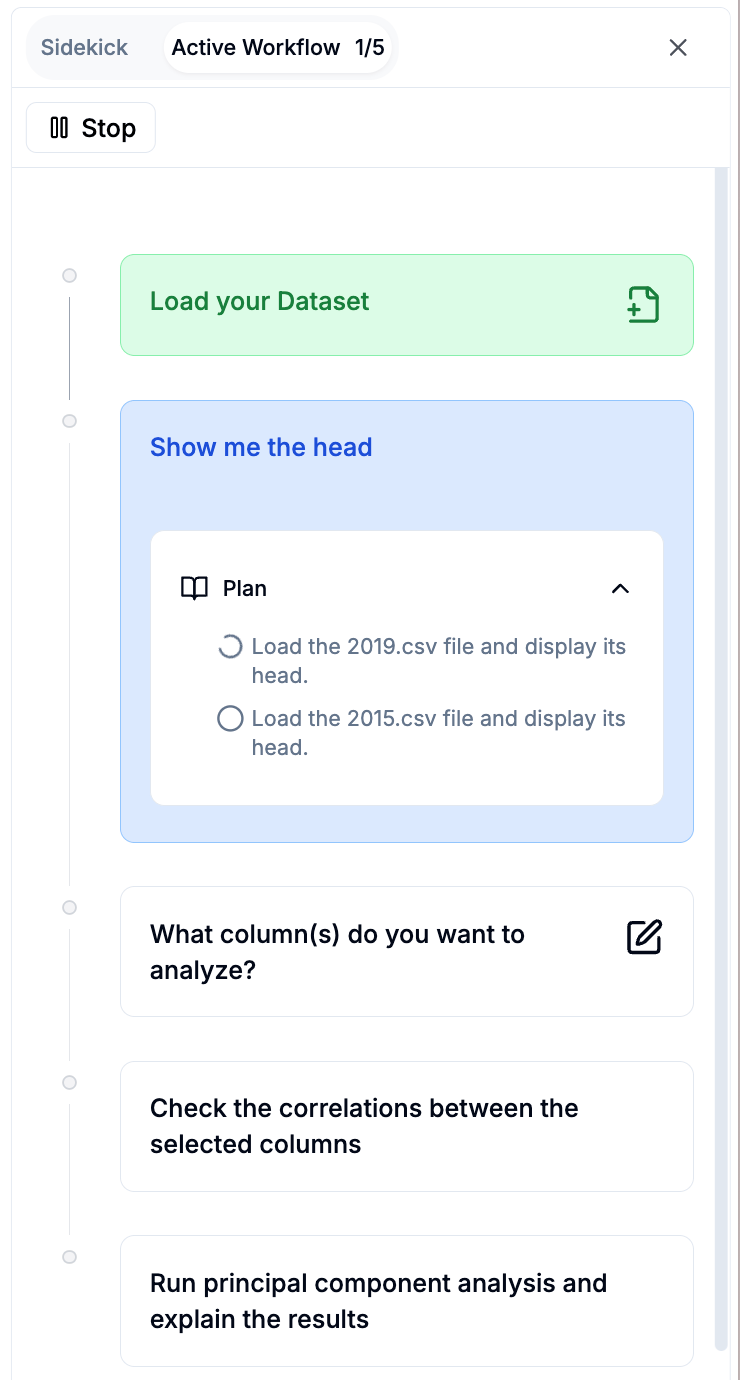

A tool that offers more transparent step-by-step analysis can be better for less experienced users

Many of us struggle to understand how AI reaches its analysis conclusions. I found Julius more transparent than ChatGPT about its “thinking” process. It explains each step, including the Python libraries and code used, so you can review and edit steps as an analysis runs to improve accuracy and relevance. It runs on the major models (OpenAI’s GPT models, Claude, Gemini, Cohere) but offers its own way of interfacing with the analysis process.

Your tool choice may subtly influence your analysis approach

Different tools guide your analysis in different ways, possibly without you noticing. Julius AI prompts you to pick from common analysis types immediately, which can limit broader exploration. In contrast, ChatGPT starts with general suggestions like “identify top trends” or “compare data sets,” starting in a more open space. This helped me uncover interesting insights in the World Happiness Report data using ChatGPT that Julius AI didn’t catch, because Julius steered me toward specific methods that weren’t always as useful.

📚 In my process, I supplemented my own testing by reading existing studies on AI data analysis and their results. You’ll find a particularly relevant one in the AI Studies section.

How I ran my tests -

I uploaded my data sets to the AI platforms, testing ChatGPT, Gemini, and Claude models, plus Julius AI’s model and others on its platform.

I began by letting each tool suggest potential analyses based on the uploaded data.

Then, I instructed them to run specific tasks, like descriptive statistics, frequency tables, odds ratios, correlation matrices, and histograms. My list included some analyses that suited the given data sets and some that suited them less.

For clarity, none of the platforms sponsored this testing; I paid for access to each platform’s best models and tools to complete my tasks.

PROMPTING PLUS

⚡️ Copy-paste Quantitative Analysis Prompts

A few of the prompts I experimented with in the testing above, plus prompts the AI platforms suggested for my surveys. I reverse-engineered and tested them in ChatGPT to make sure they worked consistently across various scenarios and data sets.

Prompt: Provide a summary of the dataset, including the count, mean, standard deviation, min, max, and quartiles for all numerical columns.
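To spot-check the AI's answer to this prompt, pandas' `DataFrame.describe()` returns these same statistics for each numeric column. If you'd rather not install anything, here is a minimal standard-library sketch for one column; it mirrors pandas' defaults (sample standard deviation, linearly interpolated quartiles). The scores are illustrative.

```python
import statistics


def describe(values):
    """Count, mean, standard deviation, min, quartiles, and max for one numeric column."""
    # method="inclusive" interpolates linearly between data points, like pandas' default
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "std": statistics.stdev(values),  # sample SD (n - 1), as pandas reports
        "min": min(values),
        "25%": q1,
        "50%": median,
        "75%": q3,
        "max": max(values),
    }


scores = [4.0, 5.0, 6.0, 7.0, 8.0]  # illustrative values
for stat, value in describe(scores).items():
    print(f"{stat:>5}: {value:.3f}")
```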

Prompt: Which factors are most correlated with [variable] in the dataset? Summarize how these factors relate to [variable].

Prompt: Analyze changes over time if the dataset contains multiple years. Which [segment] had the greatest change to the value of [variable], and what factors contributed to these changes?
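For the over-time prompt, the core calculation is: average the variable per segment in each year, then subtract. Here is a minimal sketch, assuming records tagged with a year and a segment (the region names and values are placeholders); segments missing either year are skipped rather than guessed.

```python
from collections import defaultdict
from statistics import fmean


def change_by_segment(records, segment, value, start, end, year_key="Year"):
    """Change in the mean of `value` per segment from `start` to `end`, largest first."""
    # Group values by (segment, year); several countries can share one region
    groups = defaultdict(list)
    for rec in records:
        groups[(rec[segment], rec[year_key])].append(rec[value])
    segments = {seg for seg, _ in groups}
    changes = {
        seg: fmean(groups[(seg, end)]) - fmean(groups[(seg, start)])
        for seg in segments
        if (seg, start) in groups and (seg, end) in groups
    }
    return sorted(changes.items(), key=lambda kv: abs(kv[1]), reverse=True)


# Placeholder records, not real report values
records = [
    {"Region": "Region A", "Year": 2015, "Generosity": 0.25},
    {"Region": "Region A", "Year": 2015, "Generosity": 0.25},
    {"Region": "Region A", "Year": 2019, "Generosity": 0.5},
    {"Region": "Region B", "Year": 2015, "Generosity": 0.5},
    {"Region": "Region B", "Year": 2019, "Generosity": 0.375},
    {"Region": "Region C", "Year": 2015, "Generosity": 0.5},  # no 2019 data: skipped
]

print(change_by_segment(records, "Region", "Generosity", 2015, 2019))
```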

Prompt: First, show a correlation matrix between all numerical variables to identify strong and weak relationships between the factors in the dataset.

Then, determine whether there is a statistically significant relationship between [variable 1] and [variable 2].

Prompt: Identify any outliers in the dataset where [variable] is unusually high or low compared to the average. Tell me what you interpret about the outliers.
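When the AI flags outliers, ask which rule it used, because "unusually high or low" can be defined several ways. Here is a minimal sketch of one common rule, Tukey's fences (values more than 1.5 × IQR beyond the quartiles); the values are illustrative, and an AI may reasonably use z-scores or another cutoff instead.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences), plus the fences."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high], (low, high)


values = [10, 11, 12, 13, 14, 15, 16, 40]  # illustrative values
outliers, fences = iqr_outliers(values)
print("Outliers:", outliers, "fences:", fences)
```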

AI STUDIES

😃 Can AI recognize emotion the way humans can?

The short answer? So far, YES.

Researchers from the Karlsruhe Institute of Technology (KIT) and the University of Duisburg-Essen have successfully used body language to identify the emotional states of tennis players during matches. They trained their AI model using data collected from real tennis games.

What matters here:

The AI model equals human emotion recognition ability.

Its emotion-recognition accuracy of 68.9% doesn’t sound like much, but it matches human ability.

Machine learning models and humans both recognize negative affective states (emotions) more effectively.

One theory for why: negative emotional states and expressions are more intense, and therefore potentially easier to recognize.

My interpretation: we can likely use AI to recognize friction and problems in a user’s experience - such as in product tests - about as well as a human can. 👀

〰️

📊 Is GPT-4 a Good Data Analyst?

I re-read this year-old study while running my own quantitative analysis tests, for an additional perspective. I hope it helps you, too.

Study 2: Is GPT-4 a good data analyst?

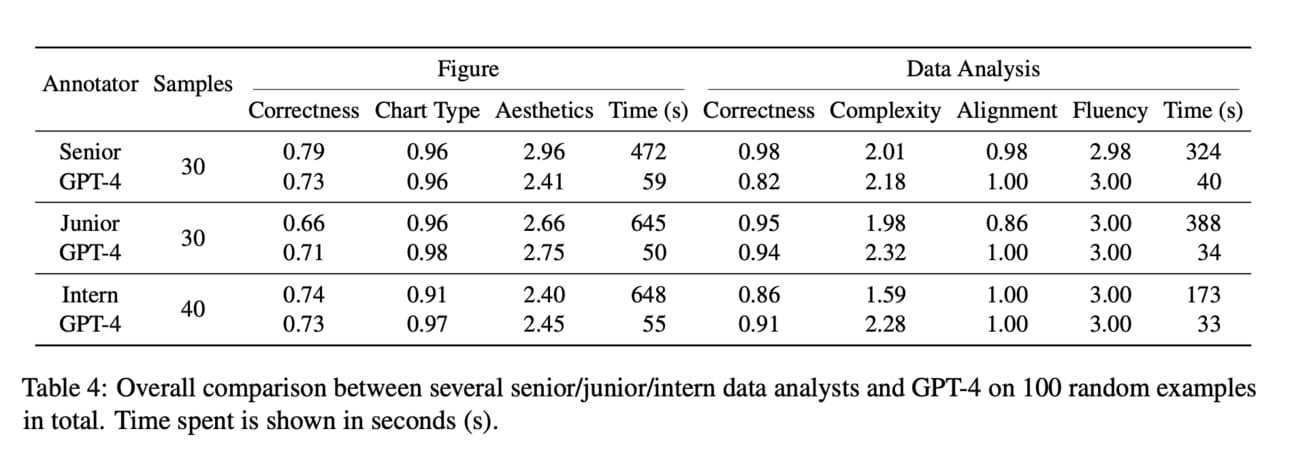

Researchers from the DAMO Academy at Alibaba Group tested GPT-4’s ability to perform data analysis tasks, comparing its performance against human data analysts of multiple experience levels. They evaluated GPT-4’s ability to extract data, generate visualizations, and provide insights using a range of databases and questions.

What matters here:

The biggest performance difference was in the correctness of the analysis (and humans win).

Senior Data Analysts received a correctness rating of 0.98 out of 1 (near perfect), while GPT-4 scored 0.82. That’s still a high score, but the gap between the two is noteworthy.

Table of results from the study

GPT-4 performed on par with junior and intern-level analysts.

In tasks like creating visualizations and extracting data (see “Chart Type” and “Aesthetics” in the table above), GPT-4 performed almost as well as entry-level analysts.

Human analysts still outperform GPT-4 on nuanced insights.

Senior analysts provided deeper, more context-rich interpretations of the data. GPT-4 sometimes lacked the ability to interpret complex patterns or offer cautious, assumption-based insights, instead making overly confident predictions.

My interpretation: Humans with senior data/quantitative analysis skills still seem to beat top AI models on accuracy. However, if you don’t have senior data analysis skills, your personal correctness score might be equal to or lower than the accuracy of current models like GPT-4o or o1. Something to think about.

NEWS TO KNOW

🧠 OpenAI launched new “o1” model for advanced reasoning

Another thing apparently matching human capability? OpenAI’s new “o1” model.

Why it matters:

The “o1” model outperformed PhD-level experts in chemistry, physics, and biology on the challenging GPQA Diamond benchmark, becoming the first model to do so.

Its performance comes from spending more time processing questions before answering, and from reinforcement learning that trains it to recognize its own mistakes and correct them by shifting reasoning strategies.

Here’s what benchmark testing showed -

Availability: The “o1-preview” and more efficient “o1-mini” are currently accessible in ChatGPT Plus and Team accounts.

Usage Limits: Right now, there are weekly limits of 30 messages for o1-preview and 50 for o1-mini.

However, I’m disappointed (though not surprised) that OpenAI chose to hide the model’s “raw chains of thought” from users.

This means we won’t have visibility into how OpenAI’s model reaches its conclusions through its improved reasoning, or be able to confirm it’s processing our data in a way that fits our needs and context.

Lastly, a few tools I’m playing around with -

Indigo is a desktop and web app that lets you save prompts and run them in any app without switching apps or tabs (watch their demo). It’s a similar idea to Text Blaze, but more advanced.

Play by Hyperspace lets you explore a topic by collecting and synthesizing information for you, a bit like Perplexity does. But it also lets you select preferred sources and AI models, so you get a more customized collection of information, complete with a mind map.

Thanks for your attention 🤝 I hope this edition sparks new ideas and sets your October off on the right foot.

See you next time!